Distance-Based Source Separation

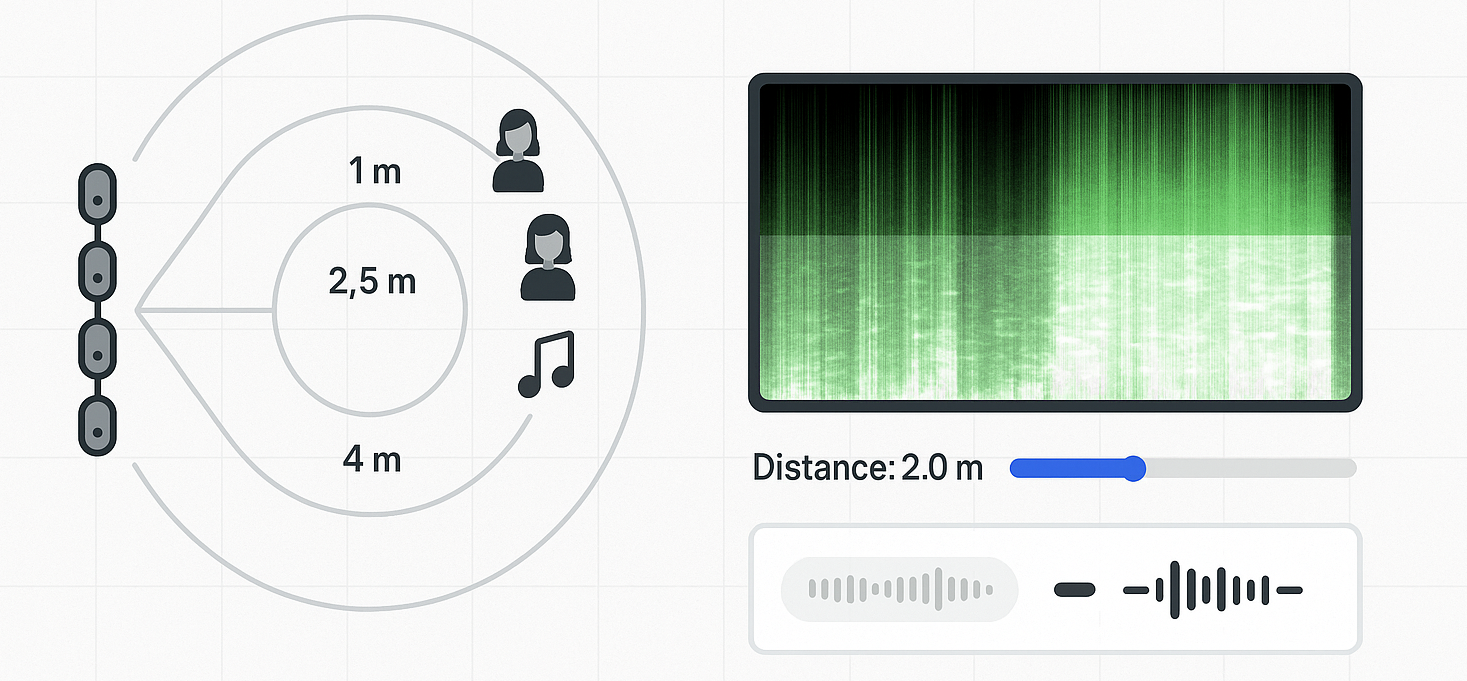

Exploring how multichannel recordings can be used to estimate source distance and separate sounds based on spatial proximity rather than source type.

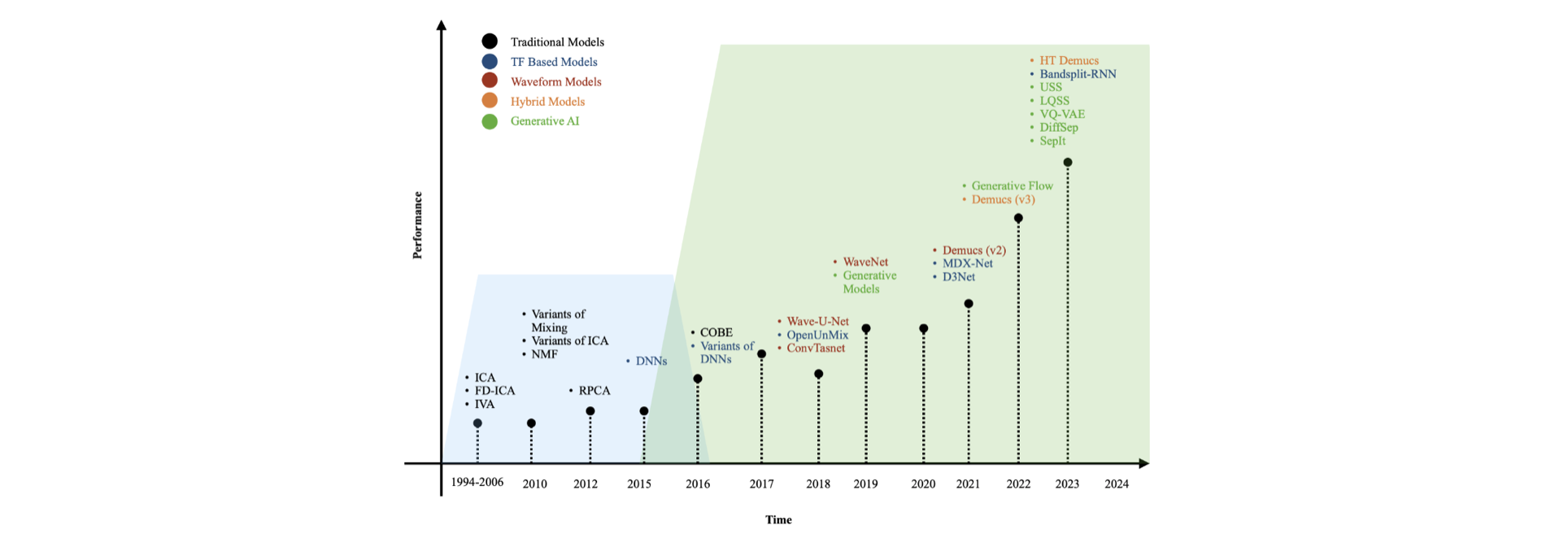

Various optimisation-based and learning-based methods were introduced for reducing interference (bleed) in live multi-track recordings.

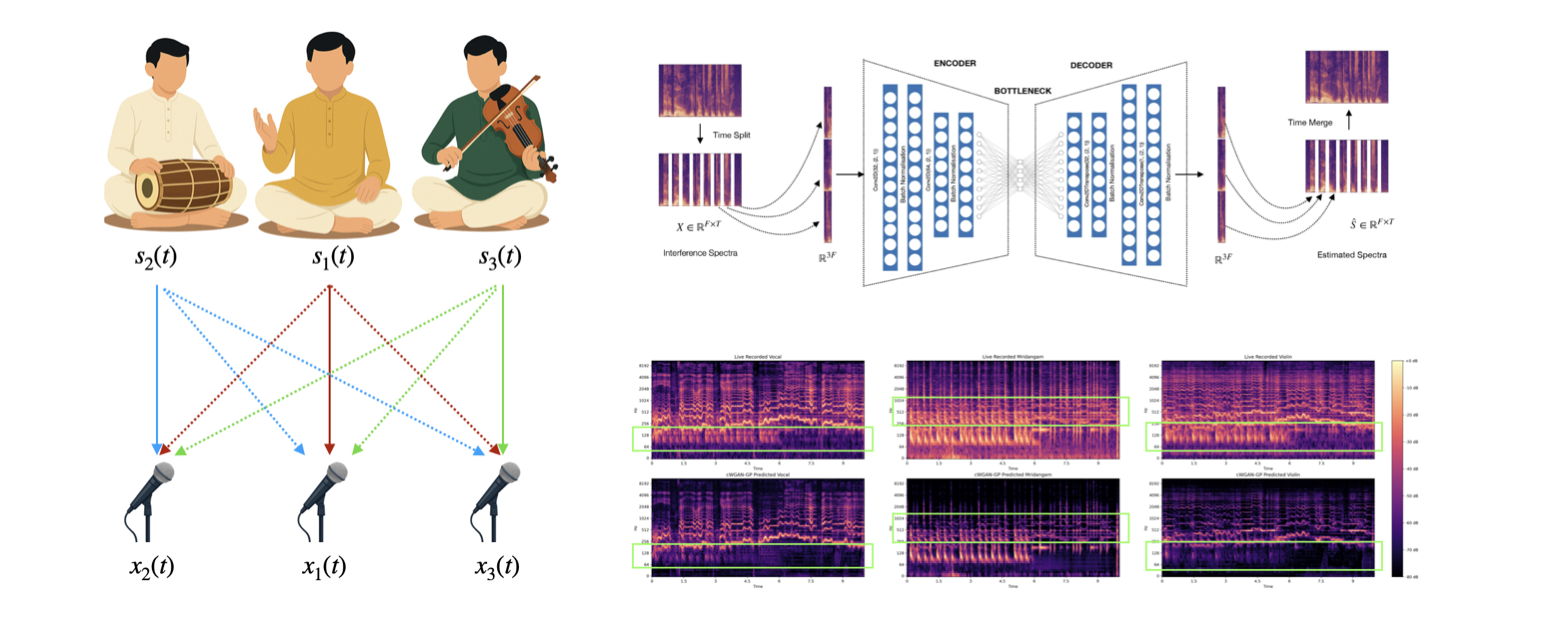

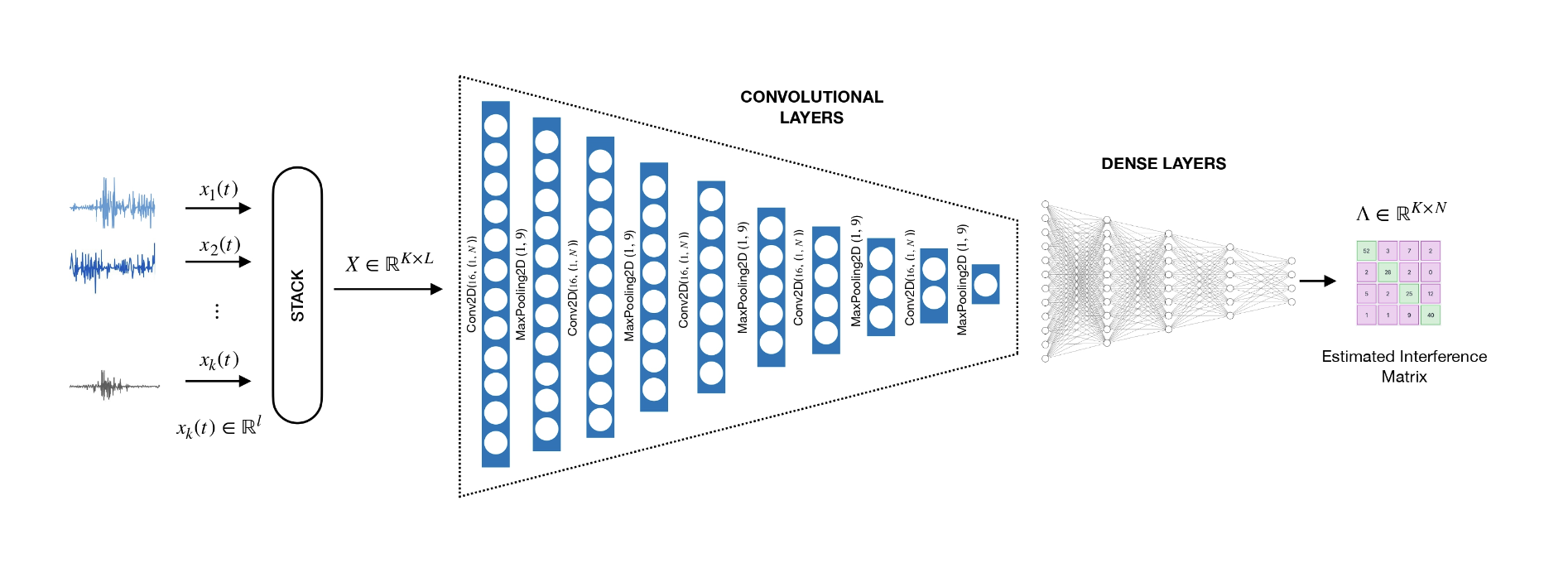

This paper presents GIRNet, a neural architecture that learns relationships between audio channels to suppress interference in multi-track music recordings. It takes raw waveforms as input and produces interference-reduced outputs. The network also shows promising generalization across diverse acoustic environments, instruments, and genres. Experiments show higher SDR and faster processing than existing methods, along with encouraging results in real-world listening.
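For readers unfamiliar with the metric, SDR (signal-to-distortion ratio) compares the energy of a clean reference track with the energy of the error left in an estimate. The sketch below uses the plain energy-ratio definition on synthetic sine tones. The paper's exact SDR variant and evaluation toolkit are not stated here, so the function name `sdr_db`, the toy signals, and the bleed levels are all illustrative assumptions, not the authors' evaluation code.

```python
import numpy as np


def sdr_db(reference, estimate, eps=1e-12):
    """Plain signal-to-distortion ratio in dB (assumed definition):
    10 * log10(||reference||^2 / ||reference - estimate||^2)."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    signal_power = np.sum(reference ** 2)
    error_power = np.sum((reference - estimate) ** 2)
    # eps guards against division by zero for a perfect estimate
    return 10.0 * np.log10((signal_power + eps) / (error_power + eps))


# Toy data (hypothetical): one second of audio at 16 kHz.
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
target = np.sin(2 * np.pi * 440 * t)   # instrument the microphone is meant to capture
bleed = np.sin(2 * np.pi * 220 * t)    # a neighbouring instrument leaking in

mic_track = target + 0.3 * bleed       # recorded track with bleed
cleaned = target + 0.03 * bleed        # stand-in for an interference-reduced output

sdr_before = sdr_db(target, mic_track)
sdr_after = sdr_db(target, cleaned)
print(f"SDR before: {sdr_before:.2f} dB, after: {sdr_after:.2f} dB")
```

In this toy case, cutting the bleed amplitude by a factor of ten raises the SDR by 20 dB, which is the kind of improvement the metric is designed to capture.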

MS by Research thesis submitted to the Indian Institute of Technology Mandi, 2024

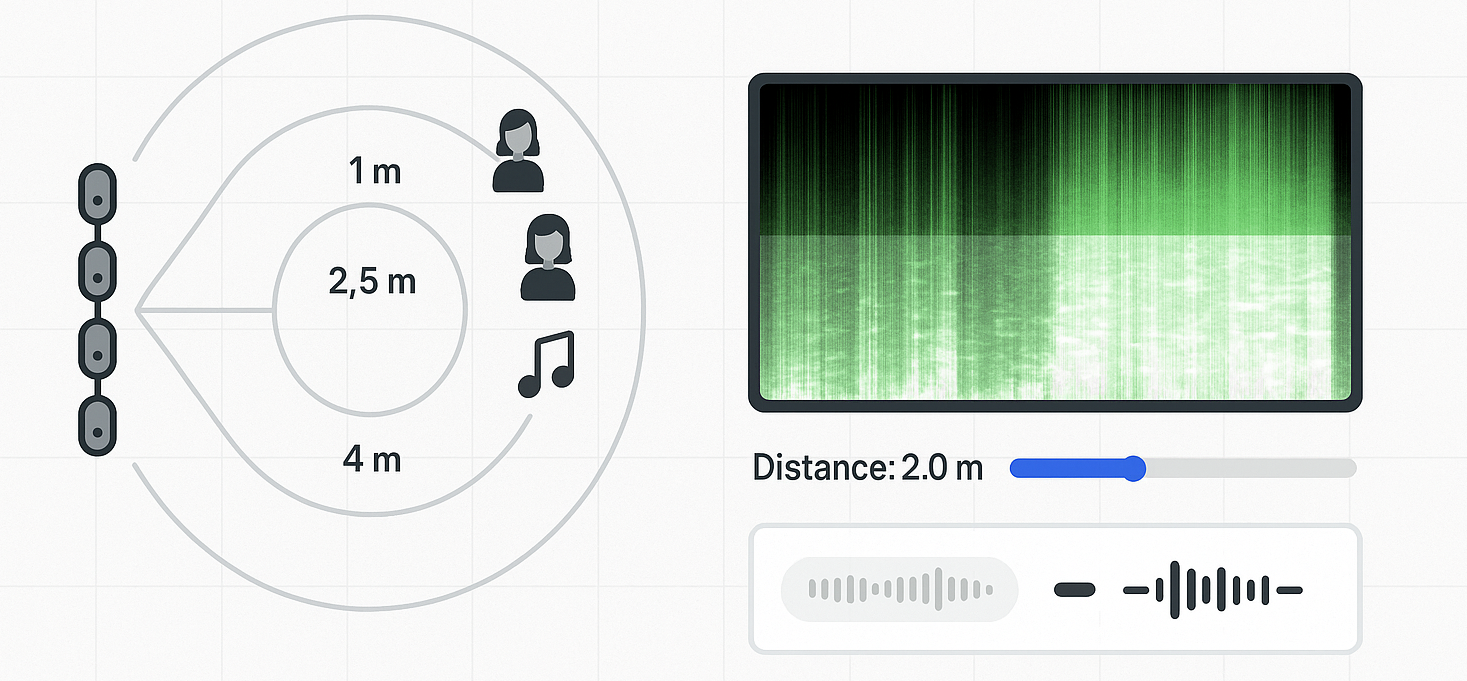

This paper introduces two neural networks for interference reduction in multi-track recordings: a convolutional autoencoder that operates on time-frequency inputs and treats interference as noise, and a truncated U-Net that operates in the time domain and reduces interference by exploiting relationships among the tracks. Experiments show that both models improve music source separation, with the truncated U-Net delivering superior performance and audio quality.

Developing source separation models tailored for Indian Art Music (Carnatic), addressing microphone bleed and complex harmonic–percussive interactions to extract instruments such as vocals, mridangam, violin, and ghatam.